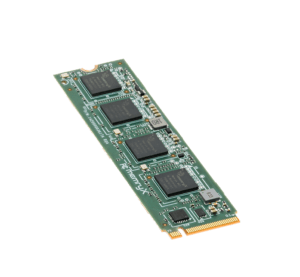

In a world where technology is constantly evolving, the MemryX MX3 M.2 AI Accelerator Module stands as a beacon of support for your AI-driven aspirations. Designed as a companion module, the MX3 M.2 Module significantly reduces the host CPU's processing load by offloading deep neural network (DNN) computer vision (CV) models. With its unique dataflow architecture, it delivers real-time, low-latency inference while conserving system power, so you can achieve more without compromise.

Key Benefits

- Supports all common frameworks

- Dataflow architecture for ultra-low latency

- Advanced power management features

- Up to 80 million weight parameters

- On-chip storage of model parameters and matrix operators eliminates the need for external DRAM

- 2/4-lane PCIe Gen3 for up to 4GB/s bandwidth

- Supports multiple concurrent models

- Floating-point activations for high accuracy

- End-user models can be deployed as-is without quantization, pruning, compression, or retraining
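The "up to 4GB/s" figure for the PCIe link follows from standard PCIe Gen3 arithmetic: 8 GT/s per lane with 128b/130b line encoding. The sketch below works through that calculation for the 2-lane and 4-lane configurations. It is a generic illustration of the PCIe Gen3 link rate, not a MemryX tool or a measured MX3 throughput. The function name is hypothetical, and real sustained throughput will be somewhat lower once packet headers and flow-control overhead are accounted for.

```python
# PCIe Gen3 signaling rate per lane: 8 GT/s (one bit per transfer per lane).
GEN3_RATE_GT_S = 8.0e9
# Gen3 uses 128b/130b line encoding: 128 payload bits per 130 transmitted bits.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gen3_bandwidth_gb_s(lanes: int) -> float:
    """Hypothetical helper: raw per-direction link bandwidth in GB/s
    (10**9 bytes/s), before protocol overhead such as TLP headers."""
    bits_per_second = GEN3_RATE_GT_S * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9  # bits -> bytes -> GB


for lanes in (2, 4):
    print(f"x{lanes}: {pcie_gen3_bandwidth_gb_s(lanes):.2f} GB/s")
```

This prints roughly 1.97 GB/s for a 2-lane link and 3.94 GB/s for a 4-lane link, which matches the "up to 4GB/s" ceiling quoted above for the 4-lane case.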

Markets

- Automotive

- Computing Devices

- Industry 4.0 & Robotics

- IoT

- Metaverse

- Smart Vision Systems